Sometimes it pays to think twice about the recommended approach to achieving your ends, especially when it comes to performing major upgrades to your application’s most crucial system… the database.

Setting the Scene

I have been working for a number of years now on a client project that uses Amazon Aurora Serverless (MySQL). About a year and a half ago, Amazon announced Aurora Serverless V2 and started encouraging everyone to upgrade their workloads. For us, there were a few blockers to doing this.

Firstly, we made extensive use of the RDS Data API for executing a suite of integration tests and other developer tooling. While AWS delivered that in a timely manner for PostgreSQL, it was not seen as a priority for MySQL and, as a result, only became available at the start of October last year. With that obstacle removed, and with the recently announced “auto-pause” (which finally meant that Aurora Serverless V2 was actually … well … serverless), it was time for us to embrace the future.

The Recommendation

At this point, I started scouring the web for a recommended approach, and kept finding the same advice: use the new blue/green deployment methodology to upgrade and test your database and then, when happy, fail over to the new V2 instance with very little downtime. This seemed interesting, so I started to plan our migration. The issue I quickly found was that this wasn’t going to play well with our very elegant Infrastructure as Code (IaC) approach to provisioning all of our infrastructure, including the database. Executing the proposed instructions would in fact have created such significant drift in the stack that it would no longer be usable.

This also highlighted another problem that I had suspected would be an issue from early on in the project. When the project started out, we had a few lambdas, an API gateway, a database and a step function or two, along with supporting roles, policies etc… For a while now, I had been wanting to extract out the database IaC from the rest of the application to support simpler disaster recovery models, and allow for a more de-coupled architecture.

We were also lucky enough to have the luxury of a decent outage window for the upgrade, so we did not necessarily need to go the blue/green deployment route. Given we had been stuck on Aurora Serverless V1, and the MySQL 5.7 version of the engine, for so long, this was going to be a large and risky upgrade that would require significant quality assurance. As such, we were very glad when AWS extended the end of life for Aurora Serverless V1 until the end of March 2025. We also knew that whatever change we wanted to deploy to production would have to go through a CAB process, so we needed to be able to demonstrate that we had done sufficient testing for such a major change and had a roll-back plan in place.

Our Approach

Extract Database CloudFormation from the Main Application and Change the Encryption

The first thing we had to do was to create a completely independent CloudFormation script for the database. When we first embarked on this project, we wanted everything in a single CloudFormation script for simplicity. However, it quickly became clear that this tightly coupled the database to the application, which made certain disaster recovery scenarios a little trickier. As such, we moved all database-related resources (secrets, dashboards, security groups and, of course, the database cluster itself) into a stand-alone CloudFormation script.

Using a combination of CloudFormation parameters and conditions, we were able to implement a solution that ensured we could still use the existing script that deployed V1 for existing environments while we tested the new scripts and the upgraded database. This was essential, as development continued on other parts of the application.
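To make the parameter-and-condition idea concrete, here is a minimal sketch of how an application template might switch between its embedded V1 cluster and a separately deployed database stack. It is not our actual template: the parameter `DatabaseStackName`, the condition `UseEmbeddedV1Database`, the resource name `LegacyCluster` and the export name `<stack>-ClusterEndpoint` are all hypothetical, and most cluster properties are omitted. The template is built as a Python dictionary and printed as JSON.

```python
import json


def build_application_template() -> dict:
    """Build a trimmed application template that either keeps its embedded
    V1 cluster or reads the endpoint exported by a separate database stack."""
    return {
        "AWSTemplateFormatVersion": "2010-09-09",
        "Parameters": {
            # Hypothetical parameter: empty means "keep the embedded V1 cluster".
            "DatabaseStackName": {"Type": "String", "Default": ""},
        },
        "Conditions": {
            "UseEmbeddedV1Database": {
                "Fn::Equals": [{"Ref": "DatabaseStackName"}, ""]
            },
        },
        "Resources": {
            "LegacyCluster": {
                "Type": "AWS::RDS::DBCluster",
                # Only created while the stack has not been migrated.
                "Condition": "UseEmbeddedV1Database",
                "Properties": {
                    "Engine": "aurora-mysql",
                    "EngineMode": "serverless",
                    # Credentials, networking and scaling omitted for brevity.
                },
            },
        },
        "Outputs": {
            "DatabaseEndpoint": {
                "Value": {
                    "Fn::If": [
                        "UseEmbeddedV1Database",
                        {"Fn::GetAtt": ["LegacyCluster", "Endpoint.Address"]},
                        # Assumes the database stack exports this (hypothetical) name.
                        {"Fn::ImportValue": {"Fn::Sub": "${DatabaseStackName}-ClusterEndpoint"}},
                    ]
                }
            }
        },
    }


if __name__ == "__main__":
    print(json.dumps(build_application_template(), indent=2))
```

With a layout like this, un-migrated environments keep deploying with the default parameter value, while migrated ones pass the name of their database stack. Note that CloudFormation removes a resource whose condition becomes false, so in practice a deletion policy on the old cluster deserves some thought.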

We also wanted to address another issue we inherited from the early days of the project. When we first started out, we used the default RDS encryption for our databases. This proved limiting, as it made it difficult to move databases around given we had separate AWS accounts for different environments. As such, we also wanted to switch to a customer managed KMS key to better support moving databases between accounts. The upgrade presented us with the perfect opportunity to make that change.

Script the Entire Upgrade

If we only had to do this upgrade 2 or 3 times, we probably would have been OK doing it manually. As a rule of thumb, though, I like to say that if you have to do the same thing more than 3 times, automate it. One needs to apply some common sense to this rule: if automating something is 10 times harder than doing it manually, then perhaps doing it manually 5 times with a detailed run sheet is fine. But we had ~40 databases to upgrade. 40, you say??? How could you possibly have 40 databases for a single project?

We use a technique for development and integration testing that I like to call Isolated Stacks, which gives each developer a dedicated, complete environment to use for their own development purposes, as well as providing a full environment for running automated integration tests. Obviously, this suits the serverless model well, as each stack essentially costs cents to operate. These isolated stacks are so useful that developers often spin up 2, 3 or even more of them. They also proved invaluable when we decided to split our integration tests up and run them in parallel to speed up our deployment pipeline. We ended up with 5 separate stacks, each running a portion of our integration test suite, which cut the time for a full test run from almost an hour down to around 20 minutes. This proved so useful that developers decided they wanted their own suite of integration test stacks in order to validate their changes before integrating them into the main code branch. This is how we got to around 40 databases for 4 developers.
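As an illustration of splitting a test suite across stacks, here is a minimal sketch that deals test files out to a fixed number of shards in round-robin order. It is an assumption about how such a split could work, not a description of our pipeline: the function name `shard_tests` and the file names are hypothetical, and a real split would more likely balance on measured test durations.

```python
def shard_tests(test_files: list[str], shard_count: int) -> list[list[str]]:
    """Deal test files out to `shard_count` shards in round-robin order.

    Sorting first keeps the assignment deterministic between pipeline runs.
    """
    if shard_count < 1:
        raise ValueError("shard_count must be at least 1")
    shards: list[list[str]] = [[] for _ in range(shard_count)]
    for index, name in enumerate(sorted(test_files)):
        shards[index % shard_count].append(name)
    return shards


if __name__ == "__main__":
    # Hypothetical test files, split across 5 integration test stacks.
    files = [f"test_feature_{n:02d}.py" for n in range(12)]
    for stack_number, shard in enumerate(shard_tests(files, 5), start=1):
        print(f"stack {stack_number}: {shard}")
```

Each shard would then run against its own isolated stack, so no two shards share a database.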

The plan was to write a script to handle every step of the upgrade. This script used the AWS CLI to perform the following:

- Test that the stack is currently in an upgradable state (application specific logic)

- Ensure the latest CloudFormation script is deployed to the stack/environment

- Modify the existing Aurora Serverless V1 database to be in provisioned mode

- Perform a major version upgrade of the engine to Aurora MySQL 3.08.0 (MySQL 8.0 compatible)

- Take a snapshot of the database

- Copy the snapshot using a customer managed KMS key

- Deploy the new database CloudFormation, creating an Aurora Serverless V2 DB cluster from the newly created snapshot

- Re-deploy the application CloudFormation, pointing it to the newly created database

- Run a suite of tests to validate that everything is still working against the new database.
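The database-specific steps in the list above can be sketched as a sequence of AWS CLI calls. This is a simplified illustration rather than our actual script: the identifiers, the instance class, the snapshot naming and the template parameter names (`SnapshotIdentifier`, `KmsKeyId`) are assumptions, and the application-specific steps (pre-checks, application re-deploy, test run) are left out. The sketch only builds the commands, and prints them unless `dry_run` is switched off.

```python
import subprocess


def build_upgrade_commands(
    cluster_id: str,
    kms_key_id: str,
    db_stack: str,
    db_template: str,
    instance_class: str = "db.r5.large",  # assumed class for the provisioned step
    engine_version: str = "8.0.mysql_aurora.3.08.0",
) -> list[list[str]]:
    """Return the AWS CLI commands for the database part of the upgrade."""
    snapshot_id = f"{cluster_id}-pre-v2"          # hypothetical naming scheme
    encrypted_snapshot_id = f"{snapshot_id}-cmk"  # copy encrypted with our key
    wait_cluster = ["aws", "rds", "wait", "db-cluster-available",
                    "--db-cluster-identifier", cluster_id]
    return [
        # Convert the Serverless V1 cluster to provisioned mode.
        ["aws", "rds", "modify-db-cluster", "--db-cluster-identifier", cluster_id,
         "--engine-mode", "provisioned", "--allow-engine-mode-change",
         "--db-cluster-instance-class", instance_class],
        wait_cluster,
        # Major version upgrade of the engine.
        ["aws", "rds", "modify-db-cluster", "--db-cluster-identifier", cluster_id,
         "--engine-version", engine_version,
         "--allow-major-version-upgrade", "--apply-immediately"],
        wait_cluster,
        # Snapshot the upgraded cluster.
        ["aws", "rds", "create-db-cluster-snapshot",
         "--db-cluster-identifier", cluster_id,
         "--db-cluster-snapshot-identifier", snapshot_id],
        ["aws", "rds", "wait", "db-cluster-snapshot-available",
         "--db-cluster-snapshot-identifier", snapshot_id],
        # Copy the snapshot, re-encrypting it with the customer managed key.
        ["aws", "rds", "copy-db-cluster-snapshot",
         "--source-db-cluster-snapshot-identifier", snapshot_id,
         "--target-db-cluster-snapshot-identifier", encrypted_snapshot_id,
         "--kms-key-id", kms_key_id],
        ["aws", "rds", "wait", "db-cluster-snapshot-available",
         "--db-cluster-snapshot-identifier", encrypted_snapshot_id],
        # Create the Serverless V2 cluster from the copied snapshot.
        ["aws", "cloudformation", "deploy", "--stack-name", db_stack,
         "--template-file", db_template, "--parameter-overrides",
         f"SnapshotIdentifier={encrypted_snapshot_id}", f"KmsKeyId={kms_key_id}"],
    ]


def run(commands: list[list[str]], dry_run: bool = True) -> None:
    """Print each command and, unless dry_run is set, execute it."""
    for command in commands:
        print(" ".join(command))
        if not dry_run:
            subprocess.run(command, check=True)  # stop at the first failure


if __name__ == "__main__":
    run(build_upgrade_commands("demo-cluster", "alias/demo-key",
                               "demo-database", "database.yaml"))
```

A real script would need more care around waiting, since a waiter can return before a modification has actually started, and a major version upgrade may also require a compatible cluster parameter group.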

This approach allowed us to test our upgrade script on targeted databases and validate the application in isolated stacks. We were also able to execute our integration tests on either V1 or V2, allowing other developers to develop, test and deploy features into production having confidence that they would work on either database.

Conclusion

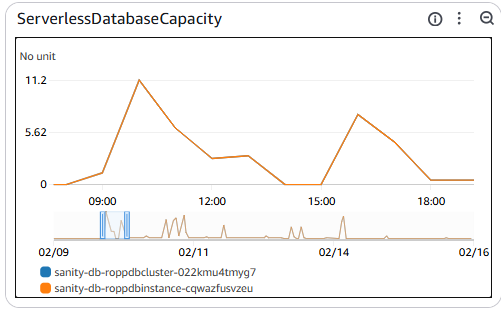

We have now migrated all but our production database (which is scheduled to be upgraded this coming week), and have achieved all of the objectives we set out to accomplish in the process. The result is that we now have a highly available Aurora Serverless V2 database backing our application, and the auto-scaling is vastly improved over Aurora Serverless V1. The chart above shows how it seamlessly scales throughout a day to support our pipeline integration tests.

The conclusion to all this is that the recommended approach isn’t always the best one for every situation. Make sure you do things with intention and understanding rather than just blindly following the “best practice”.