The AWS .NET serverless blueprints are a great way to get started coding your serverless application.

My personal favourite is serverless.AspNetCoreWebAPI, which sets up an AWS Web API complete with controllers to show you how to start coding. For a quick proof of concept, or some internal experimentation, this is a perfect starting point. However, before you deploy your fancy new serverless application into a production environment and expose it to your end users, there are a few things you should consider doing to harden or “productionize” your application.

In this series, I will show step-by-step how to take the serverless.AspNetCoreWebAPI template and make it ready for production use. Similar approaches can be applied to other blueprints.

You can follow along with each step using my GitHub example project. Most of the following recommendations are based on concepts in the AWS Well-Architected Framework, which is a set of principles to consider when deploying workloads on AWS.

The serverless blueprints always create a SAM template called serverless.template. Personally, the first thing I do is convert this to YAML, but this is just a personal preference. If you are more comfortable with JSON, then go with what you prefer.

Explicitly Create your LogGroup for your Lambda

By default, the templates don’t create CloudWatch Log Groups for your Lambda, so Lambda automatically creates a log group on the function's first invocation. This log group has a default retention period of “Never Expires”, which in reality you probably don’t want. If your Lambda is called frequently, logs accumulate over time and start to cost you just for their storage.

It is vital that you are able to set a retention policy on these log groups. You may also want different retention periods for different environments. For example, you may want a short retention period (e.g. 7 days) for anything in a test environment, but a longer one (e.g. 90 days) in production to aid in historic fault analysis.

In order to set the retention period, you need to explicitly create the log group yourself. This will require the name of the Lambda.

If you decide to explicitly set the name of your Lambda function (not the default), then it’s straightforward:

    MyLambdaLogGroup:
      Type: AWS::Logs::LogGroup
      Properties:
        LogGroupName: "/aws/lambda/MyExplicitlyNamedLambda"
        RetentionInDays: 90

But if (like me) you prefer not to explicitly name CloudFormation resources when you can avoid it, then you need to reference the Lambda function and extract its name:

    ProductionizeLogGroup:
      Type: AWS::Logs::LogGroup
      Properties:
        LogGroupName: !Sub "/aws/lambda/${AspNetCoreFunction}"
        RetentionInDays: 90

To set the retention period for different environments, simply introduce a parameter into your SAM template

    Parameters:
      LogRetentionPeriod:
        # Number, so a non-numeric value is rejected when the stack is deployed
        Type: Number
        Description: The number of days the Lambda logs are retained for
        Default: 7

And then refer to it:

    ProductionizeLogGroup:
      Type: AWS::Logs::LogGroup
      Properties:
        LogGroupName: !Sub "/aws/lambda/${MyApiFunction}"
        RetentionInDays: !Ref LogRetentionPeriod

This will allow you to set it appropriately for your different environments by explicitly providing the parameter when the default is not appropriate.
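If you would rather not remember the right number for each environment, one alternative is to pass an environment name and look the retention period up in a mapping. The following is only a sketch: the Environment parameter and the LogRetentionByEnvironment mapping are names I have made up for illustration, and the 7 and 90 day values are simply the examples used earlier.

```yaml
Parameters:
  Environment:                   # hypothetical parameter name
    Type: String
    AllowedValues: [test, prod]
    Default: test

Mappings:
  LogRetentionByEnvironment:     # hypothetical mapping name
    test:
      Days: 7
    prod:
      Days: 90

Resources:
  ProductionizeLogGroup:
    Type: AWS::Logs::LogGroup
    Properties:
      LogGroupName: !Sub "/aws/lambda/${MyApiFunction}"
      # Look up the retention period for the environment being deployed
      RetentionInDays: !FindInMap [LogRetentionByEnvironment, !Ref Environment, Days]
```

With either approach, the value is supplied at deploy time, for example with the --parameter-overrides option of sam deploy or aws cloudformation deploy.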

Note: if you have already deployed and tested the default template before adding the LogGroup resource to it, you will have to delete the log group manually, otherwise the template will fail to deploy, saying the LogGroup already exists.

Introduce an Explicit Role for the Lambda

By default, the Lambda role in the SAM template is set to null, which is a bit deceptive, because under the hood the serverless transform goes ahead and creates a role and associates it with your Lambda. This role will have the following 2 managed policies:

- AWSLambda_FullAccess

- AWSLambdaBasicExecutionRole

This is all well and good for being able to start coding using Lambda, but very quickly you’ll need to start adding different permissions depending on what you are doing. Also, these managed policies contain many permissions you don’t need or want. Worst of all, because of the Resource:'*' in the managed policies, the permissions apply to all resources in your account, and to those in any other account that has explicitly granted access to your account.

It is far better to set the Role property of your serverless function explicitly, and create your own role with very fine-grained policies exposing only the resources the Lambda needs to do its job. This is called the “principle of least privilege”, and is part of any good defence-in-depth approach.

The idea is that if your Lambda is compromised through some kind of arbitrary code execution vulnerability, the attacker is limited to only the resources the compromised Lambda has access to.

Of course neither you nor I would ever be careless enough to allow an arbitrary code execution vulnerability in our code, but what about the various dependencies you include? Any one of them could have such a vulnerability, or may gain one in a future version you upgrade to down the track.

To do this, create a role like this

    MyLambdaApiRole:
      Type: AWS::IAM::Role
      Properties:
        AssumeRolePolicyDocument:
          Version: "2012-10-17"
          Statement:
            - Effect: Allow
              Action: 'sts:AssumeRole'
              Principal:
                Service: lambda.amazonaws.com

Then set it in your serverless function like this:

    MyApiFunction:
      Type: AWS::Serverless::Function
      Properties:
        Role: !GetAtt MyLambdaApiRole.Arn

Once you have done this, you can start explicitly assigning permissions to this role. The obvious first one is permission to write to CloudWatch Logs. Given that you have already explicitly created the log group in this SAM template, you should be able to get away with the following:

    CloudWatchLogsPolicy:
      Type: AWS::IAM::Policy
      Properties:
        PolicyName: CloudWatchLogsPolicy
        PolicyDocument:
          Version: '2012-10-17'
          Statement:
            - Effect: Allow
              Action:
                - 'logs:CreateLogStream'
                - 'logs:PutLogEvents'
              Resource: !Sub 'arn:${AWS::Partition}:logs:${AWS::Region}:${AWS::AccountId}:log-group:/aws/lambda/*'
        Roles:
          - !Ref MyLambdaApiRole

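If you are explicitly naming your Lambda, you can refine the resource even further to that function's log group, as it will be …:log-group:/aws/lambda/<LambdaName>:*. Note the colon before the wildcard: in a CloudWatch Logs ARN, the log stream follows the log group name after a colon, not a slash.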

If you are not explicitly naming your Lambda, then trying to reference the Lambda resource here will cause a cyclic dependency, so this is where I choose to break it.

You can actually go a bit further in this case by understanding the way CloudFormation automatically names unnamed Lambda functions: it uses the stack name, the logical ID of the function and a series of random characters. So you could do something like …:log-group:/aws/lambda/${AWS::StackName}-MyApiFunction-*, although you need to be careful about going too far here, as the naming rule changes slightly as you approach the maximum allowed name length for Lambdas.

It should be safe enough to include just the stack name, like this: …:log-group:/aws/lambda/${AWS::StackName}-*. At this point an attacker could only write to the logs of other Lambdas provisioned in the same stack.
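Putting the stack-name variant into the policy from earlier might look like the sketch below. It assumes the function is not explicitly named, so that its generated name begins with the stack name; the trailing wildcard is what covers both the rest of the function name and the log stream part of the ARN.

```yaml
CloudWatchLogsPolicy:
  Type: AWS::IAM::Policy
  Properties:
    PolicyName: CloudWatchLogsPolicy
    PolicyDocument:
      Version: '2012-10-17'
      Statement:
        - Effect: Allow
          Action:
            - 'logs:CreateLogStream'
            - 'logs:PutLogEvents'
          # Only log groups of Lambdas whose name starts with this stack's name
          Resource: !Sub 'arn:${AWS::Partition}:logs:${AWS::Region}:${AWS::AccountId}:log-group:/aws/lambda/${AWS::StackName}-*'
    Roles:
      - !Ref MyLambdaApiRole
```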

Increase Memory Size Default

256MB may well be a decent default for the MemorySize for other runtimes, but as I have blogged about many times, for the .NET runtime it’s not necessarily the best choice.

Unless you know for sure that your Lambda is going to be very much IO bound, and you really don’t care about cold start time, set the MemorySize property to 2048 (MB), and return later to do some serious analysis of your production workload before adjusting it any differently.

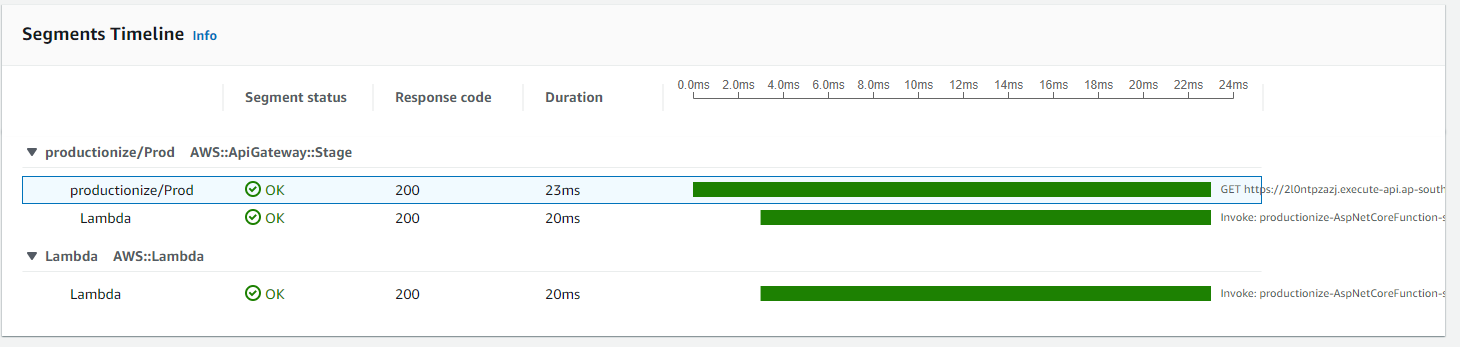
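In the template, the memory setting is a single property on the function. As a sketch, you could also expose it as a parameter so that it can be tuned per environment once you have real measurements. The LambdaMemorySize parameter name is hypothetical, and the other function properties are omitted here rather than changed.

```yaml
Parameters:
  LambdaMemorySize:        # hypothetical parameter name
    Type: Number
    Description: Memory allocated to the Lambda function, in MB
    Default: 2048
    MinValue: 128          # Lambda's minimum memory setting
    MaxValue: 10240        # Lambda's maximum memory setting

Resources:
  MyApiFunction:
    Type: AWS::Serverless::Function
    Properties:
      # Other properties (Handler, Runtime, CodeUri, Events, ...) are unchanged
      MemorySize: !Ref LambdaMemorySize
```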

Add AWS X-Ray for all services

AWS X-Ray is great for understanding what is going on across your distributed application. Add it to your Lambda code by adding the AWSXRayRecorder.Handlers.AwsSdk package to your project, and then including the following static constructor in your LambdaEntryPoint class:

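    // Requires: using Amazon.XRay.Recorder.Handlers.AwsSdk;
    static LambdaEntryPoint()
    {
        // Instrument every AWS SDK client so that its calls show up in X-Ray traces
        AWSSDKHandler.RegisterXRayForAllServices();
    }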
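The code change on its own may not be enough. As far as I know, active tracing also has to be switched on for the function, and because the role is now defined explicitly, the role needs permission to send trace data to X-Ray. The fragment below is a sketch of those two template changes, assuming the MyApiFunction and MyLambdaApiRole resources from earlier. AWSXRayDaemonWriteAccess is an AWS managed policy; you may prefer to grant the underlying X-Ray write actions directly.

```yaml
Resources:
  MyApiFunction:
    Type: AWS::Serverless::Function
    Properties:
      # Other properties (Handler, Runtime, CodeUri, Events, ...) are unchanged
      Role: !GetAtt MyLambdaApiRole.Arn
      Tracing: Active      # have Lambda trace incoming requests

  MyLambdaApiRole:
    Type: AWS::IAM::Role
    Properties:
      AssumeRolePolicyDocument:
        Version: "2012-10-17"
        Statement:
          - Effect: Allow
            Action: 'sts:AssumeRole'
            Principal:
              Service: lambda.amazonaws.com
      ManagedPolicyArns:
        # Allows the function to upload trace segments to X-Ray
        - !Sub 'arn:${AWS::Partition}:iam::aws:policy/AWSXRayDaemonWriteAccess'
```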

At this point, you may wish to explicitly define your Api as well by introducing an AWS::Serverless::Api resource, and linking it to your lambda like so:

    Events:
      ApiProxyResource:
        Type: Api
        Properties:
          RestApiId: !Ref MyGatewayApi
          Path: /{proxy+}
          Method: ANY

This will enable you to set the TracingEnabled: true property on the Api resource, and take full advantage of X-Ray in API Gateway.

    MyGatewayApi:
      Type: AWS::Serverless::Api
      Properties:
        StageName: Prod
        TracingEnabled: true

Add Amazon CloudWatch Lambda Insights

CloudWatch Lambda Insights allows you to monitor a number of metrics you don’t get out of the box, such as CPU time, memory, disk and network usage. It also gives you diagnostic insights into cold starts and Lambda worker shutdowns. This can be very useful when debugging any issues that arise.

This is added to your project by including a Lambda Layer like so

    Layers:
      - arn:aws:lambda:ap-southeast-2:580247275435:layer:LambdaInsightsExtension:21

Note: this ARN is region and architecture specific, so be sure to check for the correct layer for your region and architecture (x86-64 or ARM64).

You will also need to add a new managed policy to your role

    ManagedPolicyArns:
      - !Sub 'arn:${AWS::Partition}:iam::aws:policy/CloudWatchLambdaInsightsExecutionRolePolicy'

You should now have access to the CloudWatch Lambda Insights tab for your lambda.
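Because the layer ARN differs by region and architecture, one option is to pass it in as a parameter rather than hard-coding it. This is only a sketch: the LambdaInsightsLayerArn parameter name is hypothetical, and the default is simply the ap-southeast-2 ARN shown above, which you would need to replace with the one published for your own region and architecture.

```yaml
Parameters:
  LambdaInsightsLayerArn:    # hypothetical parameter name
    Type: String
    Description: ARN of the Lambda Insights extension layer for the target region and architecture
    # Example value from above; valid for ap-southeast-2 only
    Default: arn:aws:lambda:ap-southeast-2:580247275435:layer:LambdaInsightsExtension:21

Resources:
  MyApiFunction:
    Type: AWS::Serverless::Function
    Properties:
      # Other properties (Handler, Runtime, CodeUri, Events, ...) are unchanged
      Layers:
        - !Ref LambdaInsightsLayerArn
```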

More to Come

In Part 2 I will go through some more ways of productionizing your lambda, so make sure you check it out.