AWS CI/CD Tooling – I’ve Seen the Light

Although I am a big fan of almost all things AWS, I have always been a little critical of their CI/CD tooling: CodeBuild, CodePipeline and CodeDeploy. Having used many CI/CD tools, including Azure DevOps (formerly VSTS), Jenkins, Hudson, Buildkite and a few more, I felt I was in a position to judge AWS’s offerings. Until this week, I would have made statements like “their offering lacks the maturity of their competitors” or “there are some significant gaps”. This week, however, while discussing a colleague’s approach to AWS CodePipeline, I had what can only be described as a “Come to Jesus” moment… or perhaps a “Come to AWS” moment.

As a consultant, I’ve worked on many different types of applications in my career: thick-client single-user applications, large-scale commercial public-facing websites, native mobile apps, even Palm Pilot applications (for those who remember that era). More recently, I have been primarily focussed on public cloud applications that mix IaaS, PaaS and serverless. Each application type places different constraints on how you build, test and deliver your software, and calls for different practices.

It hit me when I understood that AWS use CodePipeline themselves to deliver many of the services they offer. With that realisation came the epiphany that AWS CodePipeline is in fact a highly opinionated CI/CD tool, optimized for quickly delivering public-cloud-based, API-driven microservices across the globe at scale.

AWS, on the whole, aren’t in the business of writing thick-client applications with a three-month release cycle, so it’s no surprise they didn’t design their tooling to (easily) support a branching strategy like GitFlow. Also, most developers considering the AWS CI/CD tools are most likely writing software that targets AWS. As such, there is little point in AWS putting a huge amount of effort into supporting application styles and delivery models that don’t target AWS. This is a point of differentiation for the AWS tooling: every other CI/CD tool has to be flexible enough to support every possible application type, whereas AWS can simplify their offering to focus on their core use case of deploying highly available, highly scalable AWS-based applications.

Let me walk you through some of the implications of this.

Trunk Based Development

There are many different branching strategies that help developers tackle the complexity of concurrent development and their unique application delivery requirements. When truly practicing continuous integration/continuous delivery, it’s almost impossible not to gravitate towards some form of Trunk Based Development. Allowing long-lived feature or maintenance branches very quickly raises the risk of deployments going south. More importantly, developers begin to spend more time addressing merge conflicts, often with little context of the changes they are trying to merge. This is known colloquially as “merge hell”, and for good reason. Even within Trunk Based Development, there are a few different approaches, but one common approach is to release directly from the trunk. This seems to be the approach sanctioned by AWS.

CodePipeline is specifically tied to a named branch in your source code repository. This to me seemed like a huge limitation. Other tools are not so hampered. But if you are practicing Trunk Based Development and releasing directly from your trunk, this should never really be a concern. This also encourages a “fix forward” approach to addressing broken builds or bad deployments.

If a team is trying to use CodePipeline without practicing Trunk Based Development, or without releasing directly from the trunk, they will find themselves creating large numbers of CodePipelines that are essentially identical but target different branches. This allows deployment differences to creep in between pipelines, which adds yet another source of potential issues. My colleague, who was the inspiration for this article, solved this fairly elegantly by using S3 as the source stage of the pipeline, essentially decoupling it from version control branches. Lambda was then used to kick off the pipeline on check-ins to source control. This pushed much of the actual deployment work into CodeBuild, which had some advantages: developers could easily change the deployment process by updating the buildspec.yml file. However, it did require some significant munging, and it doesn’t play to the strengths of either CodePipeline or CodeBuild.
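To make the trigger half of that approach a little more concrete, here is a minimal sketch of such a Lambda in Python. It assumes the function is subscribed to a CodeCommit repository trigger and that `my-app-pipeline` (overridable through a hypothetical `PIPELINE_NAME` variable) is a placeholder pipeline name. Packaging the branch’s source into the S3 source bucket is deliberately left out, and this is not necessarily how my colleague implemented it.

```python
import os

# Hypothetical pipeline name; set PIPELINE_NAME on the Lambda to override.
PIPELINE_NAME = os.environ.get("PIPELINE_NAME", "my-app-pipeline")


def branches_from_event(event):
    """Return the branch names referenced by a CodeCommit repository trigger event."""
    branches = []
    for record in event.get("Records", []):
        for reference in record.get("codecommit", {}).get("references", []):
            ref = reference.get("ref", "")
            if ref.startswith("refs/heads/"):  # ignore tags and other refs
                branches.append(ref[len("refs/heads/"):])
    return branches


def handler(event, context, client=None):
    branches = branches_from_event(event)
    if not branches:
        return {"started": None}
    if client is None:
        import boto3  # imported lazily so the parsing logic can be tested without AWS
        client = boto3.client("codepipeline")
    # Copying the branch's source bundle into the S3 source bucket is omitted here.
    response = client.start_pipeline_execution(name=PIPELINE_NAME)
    return {"started": response["pipelineExecutionId"], "branches": branches}
```

The client is injectable so the event handling can be exercised with a stub instead of real AWS credentials.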

Trunk Based Development is known to require a certain amount of discipline on the part of development teams. It forces contributors to be constantly aware of everything being committed to the trunk. It also forces patterns like feature toggling in order to work on large features that take more than a few days to implement. Many people use this as an argument for choosing a simpler branching strategy that allows for feature and stabilization branches. For certain types of applications this might make sense. However, I have far too often seen a lack of discipline with branches lead to all kinds of issues. Stabilization branches often lead to a “near enough” attitude towards check-ins. Long-lived feature branches are highly likely to consume significant amounts of integration time and risk introducing bugs. In my opinion, AWS’s choice to be opinionated about Trunk Based Development is warranted.
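Feature toggling can be as simple as a conditional around the unfinished code path. The sketch below is a minimal illustration, assuming toggles arrive through a hypothetical `FEATURE_FLAGS` environment variable; the `new-checkout` feature and its discount rule are invented for the example, and many teams use a dedicated flag service instead.

```python
import os


def enabled_features(env=None):
    """Return the set of features switched on in a comma-separated FEATURE_FLAGS variable."""
    env = os.environ if env is None else env
    raw = env.get("FEATURE_FLAGS", "")
    return {name.strip() for name in raw.split(",") if name.strip()}


def checkout_total(prices, env=None):
    # The new code path is merged to trunk but stays dark until the toggle is on.
    if "new-checkout" in enabled_features(env):
        return round(sum(prices) * 0.9, 2)  # hypothetical new pricing rule
    return round(sum(prices), 2)
```

Because the toggle is read at run time, the half-finished feature can ship with every release from trunk and be switched on per environment once it is ready.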

Build Once Deploy Multiple

An important principle of a mature DevOps process is the ability to build a single, immutable set of deployment artefacts, then push them to different environments, changing only a set of configuration parameters. The Twelve-Factor App methodology encourages the same idea by strictly separating the build and run stages and storing configuration in the environment.
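As a minimal sketch of the configuration side, assuming a Python service and hypothetical variable names (`DATABASE_URL`, `LOG_LEVEL`, `API_TIMEOUT_SECONDS`), the same artefact can read everything environment-specific at start-up:

```python
import os
from dataclasses import dataclass


@dataclass(frozen=True)
class Config:
    """Per-environment settings; the built artefact itself never changes."""
    database_url: str
    log_level: str
    api_timeout_seconds: float

    @classmethod
    def from_env(cls, env=None):
        env = os.environ if env is None else env
        return cls(
            database_url=env["DATABASE_URL"],  # required: raises KeyError if missing
            log_level=env.get("LOG_LEVEL", "INFO"),
            api_timeout_seconds=float(env.get("API_TIMEOUT_SECONDS", "5")),
        )
```

Failing fast on a missing required variable means a misconfigured environment is caught when the service starts rather than at the first request.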

CodeBuild can be used on its own or integrated with CodePipeline. On its own, you can choose to build any branch you want, which gives you the flexibility to do things like pull request builds or building specific commits. The build is defined in a YAML file (buildspec.yml) committed alongside your code, so developers can change it as required. When CodeBuild runs as part of a pipeline, it builds the branch configured in the pipeline’s source stage; the resulting artefacts are stored in S3 and made available to the rest of the pipeline as an immutable version of your application.
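For the standalone case, a build of an arbitrary revision can be started through the CodeBuild API. This sketch uses boto3’s `start_build` with its `sourceVersion` parameter; the project name is a placeholder, and the client is injectable so the logic can be exercised without AWS credentials.

```python
def start_build_for(project_name, source_version, client=None):
    """Start a standalone CodeBuild build of one specific revision.

    source_version may be a branch name or commit ID, or, for GitHub sources,
    a pull request reference such as "pr/25".
    """
    if client is None:
        import boto3  # imported lazily so the function can be tested with a stub
        client = boto3.client("codebuild")
    response = client.start_build(projectName=project_name, sourceVersion=source_version)
    return response["build"]["id"]
```

For example, `start_build_for("my-app-build", "pr/25")` would request a build of pull request 25 for a hypothetical GitHub-backed project called `my-app-build`.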

If you find yourself rebuilding code before deploying it into each environment, you can no longer guarantee that the artefacts you tested are exactly the same as those running in production. This can lead to bugs that are really hard to diagnose. Something as simple as not pinning a dependency version can cause an application to behave drastically differently if it is built at different times. Used correctly, AWS CodePipeline will move exactly the same built artefacts through your testing environments and eventually into production, allowing you to apply each environment’s configuration through environment variables.
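One way to make the “same artefacts everywhere” guarantee checkable is to record a digest when the artefact is built and verify it before each deployment. This is a hypothetical sketch rather than a CodePipeline feature; where the recorded digest is stored (for example, alongside the artefact in S3) is left as an assumption.

```python
import hashlib


def artefact_digest(path):
    """SHA-256 of a built artefact, read in chunks so large bundles are fine."""
    sha = hashlib.sha256()
    with open(path, "rb") as f:
        for chunk in iter(lambda: f.read(65536), b""):
            sha.update(chunk)
    return sha.hexdigest()


def verify_promotion(path, digest_recorded_at_build):
    """Refuse to deploy anything other than the artefact that was built and tested."""
    actual = artefact_digest(path)
    if actual != digest_recorded_at_build:
        raise RuntimeError(
            f"artefact changed since build: {actual} != {digest_recorded_at_build}"
        )
    return actual
```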

Automated Testing

Every manual test standing between code checked into your mainline and its rightful place in production is a form of technical debt.

I’ve been using unit tests since about 2003, when I was writing C++ code. But unit tests only get you so far. A suite of rigorous integration tests might take a while to run, but it can really save your bacon when integrating other developers’ work. Rarely have I seen teams with the maturity to go beyond this. UI tests can be fragile and hard to maintain. Load tests require a special skillset, can be difficult to set up, and are costly to execute at production scale. That said, just because something’s hard doesn’t mean it isn’t worth doing if it ensures your code can progress smoothly from a check-in on mainline to a release in production. This is how AWS operate: no manual checks between reviewed code and production. You can read all about it in the Amazon Builders’ Library (https://aws.amazon.com/builders-library/automating-safe-hands-off-deployments/?did=ba_card&trk=ba_card). Unlike UI-based applications, API-based applications are fairly easy to test automatically.
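As an illustration of how approachable API-level tests are, the sketch below exercises a hypothetical `/health` endpoint using only the Python standard library. To keep it self-contained it starts a local stub server; in a pipeline, `check_health` would instead point at the URL of the environment that was just deployed.

```python
import json
import threading
from http.server import BaseHTTPRequestHandler, HTTPServer
from urllib.request import ProxyHandler, build_opener

# Ignore any proxy settings so the local stub is reached directly.
_opener = build_opener(ProxyHandler({}))


class HealthHandler(BaseHTTPRequestHandler):
    """Stand-in for the service under test, exposing a hypothetical /health endpoint."""

    def do_GET(self):
        if self.path == "/health":
            body = json.dumps({"status": "ok"}).encode()
            self.send_response(200)
            self.send_header("Content-Type", "application/json")
            self.send_header("Content-Length", str(len(body)))
            self.end_headers()
            self.wfile.write(body)
        else:
            self.send_error(404)

    def log_message(self, *args):  # keep test output quiet
        pass


def check_health(base_url, timeout=5):
    """API-level check: the endpoint answers 200 with {"status": "ok"}."""
    with _opener.open(f"{base_url}/health", timeout=timeout) as resp:
        return resp.status == 200 and json.load(resp).get("status") == "ok"


def run_smoke_test():
    """Start the stub on a free local port, run the check, then shut down."""
    server = HTTPServer(("127.0.0.1", 0), HealthHandler)
    thread = threading.Thread(target=server.serve_forever, daemon=True)
    thread.start()
    try:
        return check_health(f"http://127.0.0.1:{server.server_address[1]}")
    finally:
        server.shutdown()
        server.server_close()
```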

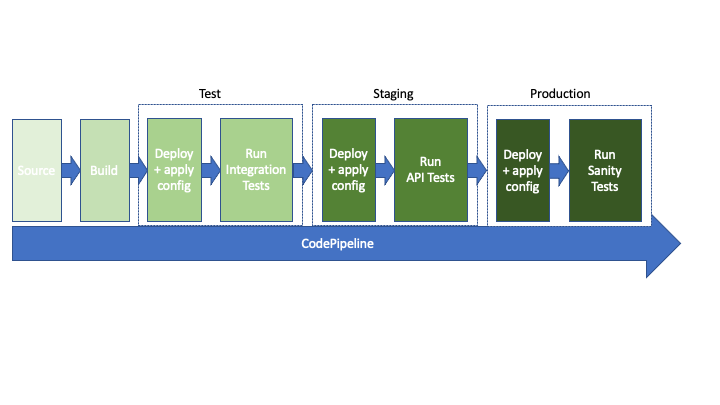

CodePipeline is designed to support moving built code from one environment to the next. A simplified picture would be Test -> Staging -> Production. In Test, a set of integration tests might be run against the code. If they pass, the next stage deploys to a Staging environment that is much closer to production. Here, load tests and subcutaneous or API tests might be run. Finally, if these all pass, the build is pushed into Production. Even then, running sanity tests against production is a great idea to ensure everything still hangs together. CodePipeline does offer the option of manual approval gates. For a maturing development team, this is a great way to test the waters for full-blown continuous deployment without stressing out management too much. It can also be handy if you don’t yet have confidence in your automated test suite’s ability to detect potential issues and still require some manual testing.

At the very least, you should have a well-defined promotion process between environments. CodePipeline was designed for this workflow and makes it easy to abort when a build doesn’t meet the promotion bar.
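The promotion logic itself is simple enough to model in a few lines. The toy model below is not the CodePipeline API; it only illustrates the workflow: the same build ID is deployed to each stage in turn, and promotion halts at the first stage whose checks fail. Stage and check names are hypothetical.

```python
from typing import Callable, List, Tuple

Check = Callable[[str], bool]  # receives the stage name, returns pass/fail


def promote(build_id: str,
            stages: List[Tuple[str, List[Check]]],
            deploy: Callable[[str, str], None]) -> Tuple[List[str], str]:
    """Move one immutable build through the stages in order.

    Every stage receives the same build_id; promotion stops at the first stage
    whose checks do not all pass, so later stages never see that build.
    """
    deployed = []
    for stage, checks in stages:
        deploy(stage, build_id)
        deployed.append(stage)
        failed = [check.__name__ for check in checks if not check(stage)]
        if failed:
            return deployed, f"halted in {stage}: {', '.join(failed)}"
    return deployed, "released"
```

A manual approval gate fits the same shape: it is simply a check that waits for a human answer instead of a test result.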

Automated Rollback

While every effort should be made to detect issues with automated tests, sometimes a change can have completely unpredictable consequences. A classic example is a seemingly harmless performance improvement that exposes a race condition previously hidden by the slowness of the old code. These kinds of issues can happen even when you are just applying security hot-fixes to dependent components or frameworks. This is where CodeDeploy’s ability to automatically roll back when a configured alarm fires can be really useful. Coupled with canary deployments, this capability can unwind a bad deployment before it does significant damage.
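The rollback behaviour can be modelled roughly as follows. This is a simplified, hypothetical simulation rather than CodeDeploy’s implementation: traffic shifts in steps similar to predefined configurations such as `CodeDeployDefault.LambdaCanary10Percent5Minutes` (10% first, the remainder later), and a callable stands in for the CloudWatch alarm that CodeDeploy would monitor.

```python
from dataclasses import dataclass, field
from typing import Callable, List


@dataclass
class CanaryResult:
    outcome: str
    traffic_history: List[int] = field(default_factory=list)  # % on the new version


def canary_deploy(steps: List[int], alarm_firing: Callable[[int], bool]) -> CanaryResult:
    """Shift traffic to the new version in steps, rolling back if an alarm fires.

    steps: cumulative percentage of traffic on the new version, e.g. [10, 100].
    alarm_firing: stand-in for an alarm check, called after each shift.
    """
    result = CanaryResult(outcome="succeeded")
    for percent in steps:
        result.traffic_history.append(percent)
        if alarm_firing(percent):
            result.traffic_history.append(0)  # send all traffic back to the old version
            result.outcome = "rolled back"
            break
    return result
```

In the real service, the bake time between steps is what gives the alarm a chance to fire while only a fraction of traffic is exposed to the new version.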

Writing code that can be safely rolled back requires some serious thought and a high level of diligence. This is especially pertinent when dealing with database schemas, which often require a multi-stage approach to land safely in a production environment. Using CodeDeploy and some of its advanced deployment features, it is possible to support safe, zero-downtime deployments with automated rollback based on alarms. Integrated into CodePipeline, this can be used to fail a deployment and alert developers. With appropriate automated testing, issues may even be detected in pre-production environments, which means the pipeline can be halted before any problematic code hits production.
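A common multi-stage approach is the expand/contract (parallel change) pattern. The sketch below illustrates the expand step with SQLite from the Python standard library; the `users` table and its columns are hypothetical. Because the old column is left untouched, the previous application version keeps working if the deployment is rolled back.

```python
import sqlite3


def expand(conn):
    """Release 1 (expand): add the new column alongside the old one and backfill it.

    The old code keeps reading full_name, so rolling the application back is safe.
    """
    conn.execute("ALTER TABLE users ADD COLUMN display_name TEXT")
    conn.execute("UPDATE users SET display_name = full_name WHERE display_name IS NULL")
    conn.commit()


def read_display_name(conn, user_id):
    """New code prefers the new column but tolerates rows written by old code."""
    row = conn.execute(
        "SELECT COALESCE(display_name, full_name) FROM users WHERE id = ?", (user_id,)
    ).fetchone()
    return row[0] if row else None

# Release 2 (contract): only once no deployed version reads full_name,
# drop the old column in a separate, later migration.
```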

Tight Integration with the AWS Platform

AWS have purposely integrated their CI/CD tooling very tightly into the AWS platform. All of these tools can be provisioned with the same CloudFormation templates as any other AWS resource. This not only makes them easier to adopt for people who already have experience with AWS tooling, it also ensures that other platform assets, such as S3 buckets and KMS keys, can be provisioned alongside them and used with them. It also makes it possible to create templates that standardize a new team’s initial setup. Using IAM roles to control access to resources means you can apply the principle of least privilege to the pipeline. While this may take extra time to set up well, it keeps your build process secure, even for cross-account deployments, and provides a familiar experience to AWS developers.
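To illustrate least privilege, here is a sketch of a narrowly scoped IAM policy document for a CodeBuild service role, built in Python. The bucket and log group names are placeholders, and a real project may need additional actions (for example `logs:CreateLogGroup`, or KMS permissions for an encrypted artefact bucket), so treat it as a starting point rather than a complete policy.

```python
import json


def codebuild_policy(artifact_bucket: str, log_group_arn: str) -> dict:
    """Least-privilege policy sketch for a CodeBuild service role.

    Allows reading and writing objects in one artefact bucket and writing
    log events to one log group, and nothing else.
    """
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Action": ["s3:GetObject", "s3:PutObject"],
                "Resource": f"arn:aws:s3:::{artifact_bucket}/*",
            },
            {
                "Effect": "Allow",
                "Action": ["logs:CreateLogStream", "logs:PutLogEvents"],
                "Resource": f"{log_group_arn}:*",  # log streams within the group
            },
        ],
    }


if __name__ == "__main__":
    # Placeholder names; substitute your own bucket and log group ARN.
    policy = codebuild_policy(
        "my-app-artifacts",
        "arn:aws:logs:us-east-1:123456789012:log-group:/aws/codebuild/my-app",
    )
    print(json.dumps(policy, indent=2))
```

The same document could be embedded in the CloudFormation template that provisions the pipeline, so the role and the resources it may touch are versioned together.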

Simplicity and Flexibility

The razor-sharp focus and blatantly opinionated approach of the AWS tooling have given it a simplicity unmatched by its competitors. AWS are unlikely to consider feature requests that do nothing to improve their preferred workflow. That said, they have integrated CodePipeline with AWS Lambda, which has very quickly become the workhorse of AWS extensibility. If there is a use case you need that AWS doesn’t directly support, the answer is always… “You can spin up a Lambda to do that.” With the power of Lambda, you can shoehorn the AWS CI/CD tools into any build and delivery process you can imagine.
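For reference, a Lambda invoked from a CodePipeline action receives the job under the `CodePipeline.job` key and must report back with `put_job_success_result` or `put_job_failure_result`; otherwise the action is left waiting until it times out. The sketch below assumes boto3 and a hypothetical rule that requires a `change_ticket` in the action’s UserParameters, supplied as JSON.

```python
import json


def handler(event, context, client=None):
    """Custom step run by a CodePipeline Lambda invoke action."""
    job = event["CodePipeline.job"]
    job_id = job["id"]
    config = job["data"]["actionConfiguration"]["configuration"]
    if client is None:
        import boto3  # imported lazily so the logic can be tested without AWS
        client = boto3.client("codepipeline")
    try:
        params = json.loads(config.get("UserParameters", "{}"))
        # Hypothetical rule: refuse to continue without a change ticket.
        if not isinstance(params, dict) or not params.get("change_ticket"):
            raise ValueError("UserParameters must include a change_ticket")
    except ValueError as exc:  # also covers malformed JSON (JSONDecodeError)
        client.put_job_failure_result(
            jobId=job_id,
            failureDetails={"type": "JobFailed", "message": str(exc)},
        )
        return "failed"
    client.put_job_success_result(jobId=job_id)
    return "succeeded"
```

Even a tiny extension like this is code that someone has to own, test and keep up to date.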

Of course, this requires some effort from you. The caveat here is that once you start writing your own Lambdas, you are then tasked with the maintenance of the code running in those Lambdas. Too often teams see these kinds of extensions as “once-off” development tasks, and they are left to atrophy. If you are finding yourself going to great lengths to munge CodePipeline into something it was never designed to do, you might want to take a step back and ask yourself why you are fighting with your tools. Should you perhaps consider a delivery approach closer to AWS’s well-trodden path? Should you be using AWS CI/CD tools in the first place?

Conclusion

Few companies are operating at the same scale as AWS, and few development teams have the level of maturity and discipline to implement continuous, zero down-time deployments at scale. That said, it is important to understand the use case CodePipeline, CodeBuild, and CodeDeploy have been designed to support. Even if you are not operating at AWS scale, many applications and development teams can benefit from the principles that have been baked into the tooling.

Whenever you find yourself fighting against a tool that is designed to make your life easier, take a moment to understand the tool’s intended purpose and question your own approach. At the very least, if you choose to deviate from the advised path, you should understand why, and do it deliberately, knowing the pros and cons of your decision. After my epiphany, I have a much clearer understanding of why CodePipeline, CodeBuild and CodeDeploy are the way they are, and a clearer picture of how I want to use them going forward.