Generative AI coding assistants have been gaining popularity recently, so I have been trialling a few different AI coding agents myself: Roo Code, Amazon Q Developer and GitHub Copilot. So far the experience has been mixed. In some scenarios I spent more time and energy fixing the issues they created than doing the work myself would have cost; at other times, the AI did things in a better way than I would have thought of. One thing is clear, though: you really want to isolate your AI coding agents from the rest of your system. Once you give an agent the power to change code and execute commands, you can get into trouble if you’re not careful, and there have been stories of AI agents wiping entire hard drives. Then there’s the question of leaking data: if the AI can read your code files, what’s stopping it from reading other things on your system?

Because of this, any serious AI-assisted coding should be performed in a completely isolated environment. In Visual Studio Code, the best way to achieve this is with Dev Containers. In this post I’ll go over a few tips and tricks I’ve learnt for setting up a Dev Container that is ready for developing serverless AWS applications.

Recommended Tools Installable with Features

I generally develop .NET-based serverless applications using the AWS SAM templates provided by the dotnet lambda tooling, so let’s look at a good setup for that. First, choose a good base Dev Container image. I’ve found mcr.microsoft.com/devcontainers/dotnet:1-8.0-bookworm to be a pretty good starting point.

Next, add the tooling the image is missing. You can do this either by editing the Dockerfile or, better still, by using Dev Container Features, which are declared in the features section of devcontainer.json:

    {
      ...
      "features": {
        ...
      }
    }
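The following is a minimal sketch of a complete devcontainer.json. It shows where the base image sits alongside the features block. The "name" value is a hypothetical placeholder. Setting "remoteUser" to "vscode" is an assumption that matches the non-root user the Microsoft dotnet images provide. The AWS CLI entry is just one example of a feature from this section.

```jsonc
{
  // Hypothetical display name shown by VSCode
  "name": "dotnet-aws-serverless",
  // The base image suggested above
  "image": "mcr.microsoft.com/devcontainers/dotnet:1-8.0-bookworm",
  // Assumption: run tools and terminals as the image's non-root "vscode" user
  "remoteUser": "vscode",
  // Feature entries such as the ones in this section go here
  "features": {
    "ghcr.io/devcontainers/features/aws-cli:1": {}
  }
}
```

VSCode looks for this file at .devcontainer/devcontainer.json in your workspace. A .devcontainer.json at the workspace root also works.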

The first is the .NET CLI. The image suggested above already comes with a version of the .NET CLI installed, but if you don’t use that image, or need a newer version, you can install it by adding the following feature:

    "ghcr.io/devcontainers/features/dotnet:2": {
      "version": "<version>"
    }
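As an illustration only, here is how you might pin the SDK when your base image does not include .NET. The generic Debian base image and the "8.0" value are examples, not recommendations. The feature also accepts values such as "latest" and "lts"; check its README for the full list of options.

```jsonc
{
  // Assumption: a generic Debian base image without .NET preinstalled
  "image": "mcr.microsoft.com/devcontainers/base:bookworm",
  "features": {
    // Example only: pin the .NET SDK to the 8.0 channel
    "ghcr.io/devcontainers/features/dotnet:2": {
      "version": "8.0"
    }
  }
}
```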

Next is the AWS CLI, which can be added with:

    "ghcr.io/devcontainers/features/aws-cli:1": {}

While some may be happy using bash, I prefer PowerShell Core as my default development shell. That’s not just because I’m more familiar with it, but also because of certain modules I have come to depend on. The great thing about the PowerShell feature is that it has a built-in way of installing any modules you want. My absolute favourite, the killer tool I just can’t live without, is posh-git. There’s also a PowerShell-based AWSCredentialsManager module (I will do a post on it another time) that is indispensable when developing on AWS, especially when managing multiple accounts. I also use powershell-yaml to convert JSON CloudFormation templates into more readable YAML.

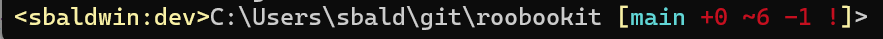

The PowerShell feature also lets you specify a URL from which to download your PowerShell profile. I use my profile to load the posh-git module and then tweak the posh-git prompt to show the AWS profile I am currently using. That is the profile any AWS CLI command will use if you do not explicitly pass --profile xxxxx.

To add the PowerShell feature, use:

    "ghcr.io/devcontainers/features/powershell:1": {
      "modules": "posh-git, AwsCredentialsManager, powershell-yaml",
      "powershellProfileURL": "<url to powershell profile>"
    }

My default profile looks something like this:

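    # Load posh-git for its Git-aware prompt
    Import-Module posh-git
    # Single quotes defer expansion: posh-git expands $env:AWS_PROFILE each time it draws the prompt
    $GitPromptSettings.DefaultPromptPrefix.Text = '<$env:AWS_PROFILE>'
    $GitPromptSettings.DefaultPromptPrefix.ForegroundColor = [ConsoleColor]::Cyan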

This gives me a pwsh prompt that shows the current AWS profile, in cyan and wrapped in angle brackets, ahead of the usual posh-git path and branch status.

Honestly, I have never seen a better tool than posh-git for quick feedback about the current status of your Git branch. Combined with the context of which profile my AWS CLI commands will run against, it means I can code efficiently and with confidence.

The final missing piece is the dotnet lambda tools and templates. Unfortunately, when I first started setting up a Dev Container, there was no feature available for this. You could install them by running the following either in the Dockerfile (if your base image comes with the .NET CLI installed) or in a PostInstall.sh script (if it doesn’t):

    dotnet tool install -g Amazon.Lambda.Tools
    # "dotnet new install" replaces the older, deprecated "dotnet new -i" syntax
    dotnet new install Amazon.Lambda.Templates
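If you take the script route, one way to run these commands is devcontainer.json’s postCreateCommand property. It runs once after the container has been created and, as I understand it, runs as the remote user rather than root by default. The following is a sketch that inlines the two commands instead of calling a separate script.

```jsonc
{
  // Runs once after the container is created. The string form is run through
  // a shell, so && installs the templates only if the tool install succeeds
  "postCreateCommand": "dotnet tool install -g Amazon.Lambda.Tools && dotnet new install Amazon.Lambda.Templates"
}
```

To keep the commands in a script instead, point the property at it, e.g. "bash .devcontainer/PostInstall.sh". That path is a hypothetical location.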

This wasn’t the experience I was looking for, so I wrote my own dotnet-lambda-feature to make setup simpler. The project is open source, so feel free to use it.

    "ghcr.io/scottjbaldwin/dotnet-lambda-feature/dotnet-lambda:1": {}

This feature ensures that the dotnet lambda tooling and the corresponding templates are installed for the Dev Container’s remote user rather than root.

There are many other Dev Container features you might want, depending on what you are trying to achieve (for example, the SAM CLI), but the ones above should give you a good starting point.

Sharing Host Configuration

While it is entirely possible to configure your AWS profiles and Git settings in the container itself, I prefer to share my host machine’s configuration. That way I can set it up once and have multiple containers use the same configuration. It also saves you redoing the profile setup whenever the container is rebuilt (e.g. after adding new features or configuration to the devcontainer.json file).

To share your host’s AWS and Git configuration, add mounts like these to devcontainer.json:

    "mounts": [
      "source=${localEnv:HOME}${localEnv:USERPROFILE}/.aws,target=/home/vscode/.aws,type=bind,consistency=cached",
      "source=${localEnv:HOME}${localEnv:USERPROFILE}/.gitconfig,target=/home/vscode/.gitconfig,type=bind,consistency=cached"
    ],

A host normally has only one of HOME (macOS and Linux) or USERPROFILE (Windows) set. Concatenating the two therefore resolves to your home directory on Mac, Windows and Linux alike. The target paths assume the container’s user is vscode, as it is in the image suggested above.
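You may not want the container, or any AI agent running inside it, to be able to modify your host Git configuration. In that case, the Docker bind-mount syntax that these strings use also accepts a readonly option. The following sketch applies it to .gitconfig only. I’ve left ~/.aws writable because, as I understand it, the AWS CLI writes cached SSO and credential data under that folder. Bear in mind that anything in the container that tries to write global Git config, such as git config --global, will then fail.

```jsonc
{
  "mounts": [
    // ~/.aws stays writable so the AWS CLI can update its caches
    "source=${localEnv:HOME}${localEnv:USERPROFILE}/.aws,target=/home/vscode/.aws,type=bind,consistency=cached",
    // readonly: processes in the container can read .gitconfig but not change it
    "source=${localEnv:HOME}${localEnv:USERPROFILE}/.gitconfig,target=/home/vscode/.gitconfig,type=bind,readonly"
  ]
}
```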

VSCode Extensions

Extensions drive much of VSCode’s power, and Dev Containers let you install a small, targeted set of extensions for the codebase you are working on. Keeping this list focused gives the best performance in your Dev Container and helps you concentrate on the task at hand. Everyone has their own set of VSCode extensions they can’t live without, but for developing AWS serverless applications in .NET, this is a good starting point:

    "customizations": {
      "vscode": {
        "extensions": [
          "ms-dotnettools.csharp",
          "ms-dotnettools.csdevkit",
          "amazonwebservices.aws-toolkit-vscode",
          "kddejong.vscode-cfn-lint",
          ...
        ]
      }
    }

Miscellaneous

One small trick I like is to set my default AWS profile like so:

    "containerEnv": {
      "AWS_PROFILE": "<my-aws-profile>"
    }

Of course, if you are developing in a team, you might want to agree on a profile naming convention before doing this.

You can use this method to set up any environment variables required for development.
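In a team, one alternative to hard-coding a profile name is to pass through whatever AWS_PROFILE each developer has set on their host. This uses the same ${localEnv:...} variable syntax as the mounts above. The fallback after the second colon ("default" here) is an example; use whatever profile name your team agrees on.

```jsonc
{
  "containerEnv": {
    // Uses the host's AWS_PROFILE if set, otherwise falls back to "default"
    // ("default" is an example fallback, not a requirement)
    "AWS_PROFILE": "${localEnv:AWS_PROFILE:default}"
  }
}
```

containerEnv values are fixed when the container is created, so changing the host variable later means rebuilding. remoteEnv is the property for values that should be picked up on reconnect without a rebuild.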

You can also have certain VSCode settings applied automatically. Here, I have set pwsh as my default terminal, along with a few other settings related to .NET development:

    "customizations": {
      "vscode": {
        "settings": {
          "terminal.integrated.defaultProfile.linux": "pwsh",
          "terminal.integrated.profiles.linux": {
            "pwsh": {
              "path": "/usr/bin/pwsh",
              "args": ["-NoLogo"]
            }
          },
          "dotnet.defaultSolution": "<mysolutionfile>.sln",
          "omnisharp.enableRoslynAnalyzers": true
        }
      }
    }

Again, if you’re working in a team, make sure these settings suit the whole team.

Conclusion

Dev Containers are a great way to ensure a consistent development environment across a team. They also give you an isolated environment in which to use agentic AI coding tools. If those tools go rogue, the damage they can do and the data they can reach are limited to what you have given the container.

I have put together a starter kit to help you get going. Please let me know in the comments if there are any other tools you think belong here.