On Wednesday the 31st of August, I was given the privilege of speaking at the Melbourne AWS User Group on one of my favourite topics, Performance tuning .Net Lambdas.

I thought I would take the opportunity to post a link to the talk, as well as the PowerPoint deck, and dive into some of the details in my analysis.

I have posted in the past about the challenges of cold start and lukewarm start in .Net, and how to mitigate these problems. I have also written about another way to reduce this impact by using the .Net feature ReadyToRun. Both of these posts were based on analysis I conducted using .Net Core 3.1. For the Melbourne AWS User Group, I decided to refresh my analysis using .Net 6, for which AWS released Lambda runtime support back in February of this year. Fortunately, everything I had written about in the past still applies to .Net 6, so the advice in those posts still stands. However, there was a very interesting subtlety that had eluded me until a few days after the talk.

Because I had simply upgraded the projects used in my previous analysis from .Net Core 3.1 to .Net 6, I had missed a very interesting change in the default templates provided by AWS. Between .Net Core 3.1 and .Net 6, the default templates for AWS Lambda now explicitly turn on the “ReadyToRun” feature.
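For reference, the switch in question is a single MSBuild property in the project file. The fragment below is a minimal sketch of a .Net 6 Lambda project with it enabled, not a copy of any particular AWS template; the surrounding properties are assumed for illustration and your own project may differ. ReadyToRun publishing also needs a target runtime, which I am assuming the deployment tooling supplies at publish time.

```xml
<Project Sdk="Microsoft.NET.Sdk">
  <PropertyGroup>
    <TargetFramework>net6.0</TargetFramework>
    <AWSProjectType>Lambda</AWSProjectType>
    <!-- Pre-compiles assemblies to native code at publish time,
         reducing the JIT work needed during cold start. -->
    <PublishReadyToRun>true</PublishReadyToRun>
  </PropertyGroup>
</Project>
```

Setting `PublishReadyToRun` to `false`, or removing the line, falls back to purely JIT-compiled code, which makes it straightforward to compare the two approaches for a given function.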

Making this change now does make sense. In .Net Core 3.1, if you wanted to enable ReadyToRun you had to explicitly compile on the platform you wanted to target, meaning the only way to do this for a .Net Lambda was to set up a CodeBuild project using an Amazon Linux 2 build agent. Defaulting this in .Net Core 3.1 would have caused havoc for the local development experience. This has fortunately been addressed in .Net 6.

Like many defaults, this is a really good starting point for most projects, but may or may not be the best approach for the lambda code you are writing. As described in the other posts, my first-pass approach to performance tuning AWS .Net lambdas is to tweak another default: the MemorySize parameter in the serverless template. 256MB may well be a good default for many other runtimes, but for .Net, not so much, especially when you want to address cold start and lukewarm start times. I have settled on 2048MB as my preferred starting point for MemorySize for .Net Lambdas, but even that is dependent on what I’m doing in the lambda.

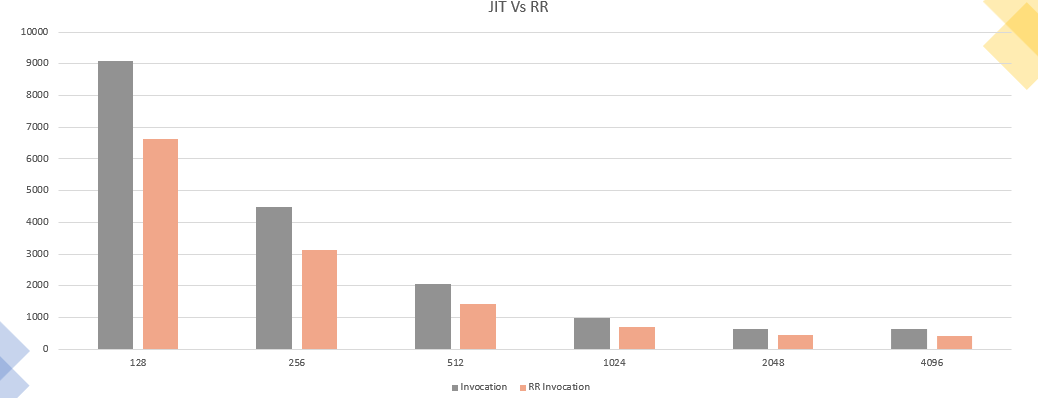

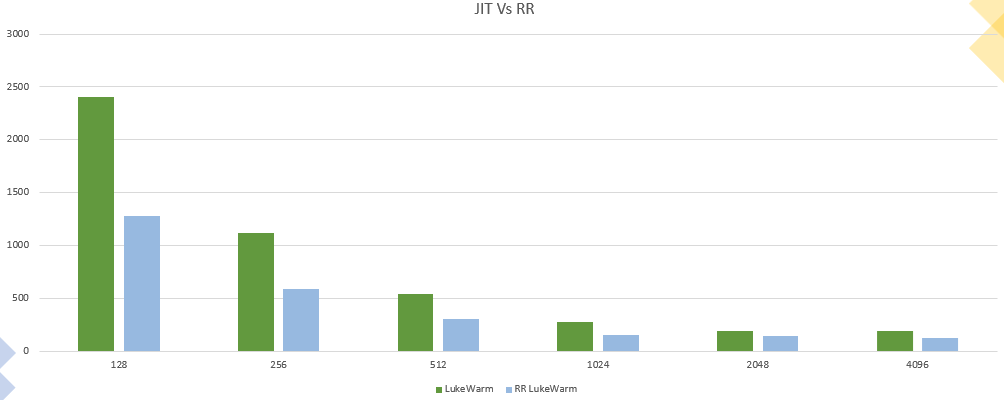

Similarly, with the ReadyToRun default, you need to pause and ask “is this right for me?” The benefits are obvious, as can be seen from the performance improvement you gain at both cold start and lukewarm start in the accompanying charts.

While this looks great, a deeper understanding of the impact of compiling as ReadyToRun highlights that there may be negative impacts on your warm start times, because ahead-of-time (AOT) compiled code cannot be as efficient as JIT-compiled code and results in a larger working set. While this can be partially mitigated with tiered compilation, it will never be as good as purely JIT-compiled code. It turns out that when it comes to performance, JIT compilation is actually .Net’s superpower, even if it’s the predominant cause of the cold start issue. In a future post, I want to dive into some more of the optimizations that can be achieved in .Net 6 lambdas, and put some numbers around the impact of the ReadyToRun option.

A full analysis of this impact, as well as details on other .Net 6 performance improvements, can be found in this post by Stephen Toub from Microsoft. As a rough guide though, where you may want to change this default is when you are writing lambdas that are CPU intensive and spend the majority of their time executing in the warm state. I think compiling with ReadyToRun is a really good default for probably the majority of lambdas you’d be writing, but if performance really matters, it may pay to experiment with this option.